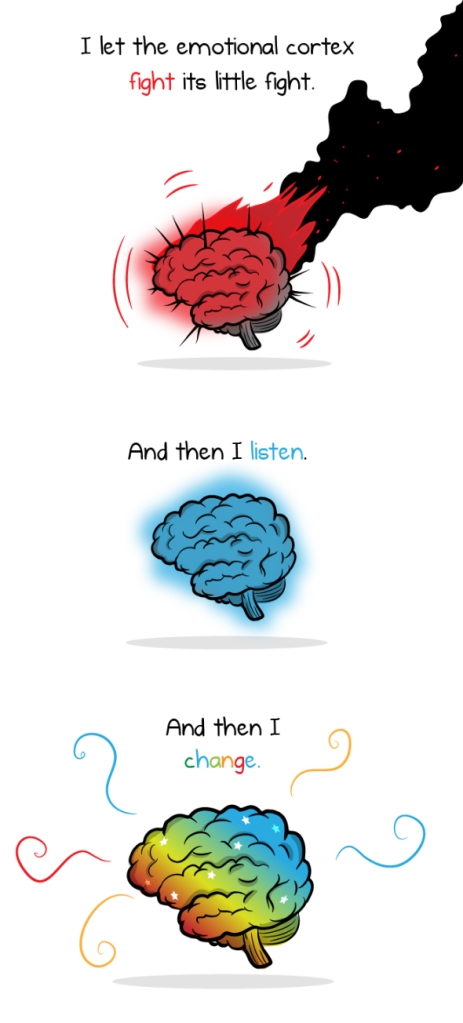

Does dialogue work to harmonise conflicting views, or does it simply entrench differences? According to extensive research in the psychology of polarised opinion, the answer is discouraging: when people of any ideological stripe encounter opposing views and evidence, their beliefs grow even more divergent.1 Hearing from the other side seems to make people double down on their original positions. The Oatmeal’s compelling comic You’re Not Going to Believe What I’m About to Tell You explains the friction involved in changing beliefs:

Why do we not only ignore evidence counter to our beliefs, but dig our heels in deeper and believe more strongly in the opposing argument? Why would providing more evidence make someone less likely to believe in an idea? It’s called the backfire effect, and it’s a well-documented psychological behaviour. It makes us biologically wired to react to threatening information the same way we’d react to being attacked by a predator. It’s a biological way of protecting a worldview.

The backfire effect is a source of frustration for those of us dedicated to fostering understanding in an ideologically divided world. If coming face to face with contrasting positions makes people simply hunker down, it’s a dead-end strategy. But the alternative is equally unpromising: if people remain in their respective echo chambers, cut off from opposing points of view, they’ll develop a sense of ‘false consensus’ –– an assumption that everyone shares their own views –– and they’ll never recognise (much less admit to) the possibility of being wrong.

We’re in quite a predicament, caught between the Scylla of defensive self-justification and the Charybdis of blind mutual reinforcement.

It’s hazardous –– but it’s not hopeless. We can open ourselves to different points of view, and help others do the same; we just need to cultivate the right dispositions. We need to want to escape our echo chambers, and we need to want to engage constructively with people who may appear to be our adversaries.

Art by Mouni Feddag

Here are some avenues for motivating these powerful desires –– and for overcoming the indifference, stubbornness, credulity and complacency that might otherwise stymie our attempts:

- Cherish rigorous thinking, and recognise that learning to reason and communicate better are valuable pursuits that are worthy of sustained effort.

- Be curious about other points of view. No matter whether your ultimate goal is to interrogate your own views or to persuade someone else, sincere enquiry is one of the most effective strategies. Kal Turnbull, founder of the Change My View subreddit, observes that success begins with “asking questions in a really productive way… genuinely trying to understand, first and foremost, what’s being said… [Y]ou should always start with the questioning, because either you learn enough to change your [own] view, or you learn enough to change [the other person’s] view.”

- Be open-minded, that is, be critically receptive to alternative possibilities, ready to consider them with fairness and impartiality, and inclined to follow arguments where they lead. Equally, be open to criticism, take new evidence into account, continually reassess the balance of evidence, and be willing to concede your claims or even your entire position.

- Be sceptical and discerning: habitually seek evidence, examine warrants and test arguments. Similarly, be vigilant about the assumptions and biases that might distort your own and others’ thinking. Bear in mind William James’ observation: “A great many people think they are thinking when they are merely rearranging their prejudices.”

- As the above points suggest, be self-correcting: humble enough to recognise your own fallibility, and courageous enough to act on it. Peter Ellerton puts it like this: “Embrace when you are wrong, explain why you might have come to the wrong conclusion and celebrate the fact that you are in a better position than before.”

- Make opportunities to think collaboratively in a group setting, in which each participant is responsible for making their thinking accountable to the others, and each stands to benefit from the others’ insights. To quote Peter Ellerton again, we need “a range of dispositions which make us want to not just make our position clear to others, but to make our reasons clear to others. If we don’t take the trouble to try and make the reasoning that is sensible to us also accessible and meaningful to other people, then that’s not public reasoning, it’s just a different kind of assertion.”

- De-escalate confrontation. Barry Lam points out that there’s a stronger tendency for dialogue to increase polarisation of opinion if the encounter is hostile or combative. If we hope to find common ground, we need to find ways of defusing aggression and promoting civility and respect.

- Take charge of your learning by understanding the purpose of enquiry and argument, and reflecting metacognitively on how you use these methods. Deanna Kuhn makes clear just how high the stakes are here:

People who know how they know all that they know to be true are in control of their own knowing. They understand what support must be in place to justify a claim and what kinds of counterevidence disprove it. They are thus open to change of belief in the face of new evidence and argument, but their beliefs do not fluctuate in reaction to every new influence. To be in control of their own knowing and thinking may be the most important way in which people, individually and collectively, take control of their lives.

………………………………….

Postscript, 12/12/2018

Thanks to Andrew Withy for drawing my attention to a number of studies that call the validity of the backfire effect into question.

One replication study suggests that it may occur only under certain conditions, and that its effect size may not be as great as was initially supposed.

Another experimental study found “that backfire is stubbornly difficult to induce, and is thus unlikely to be a characteristic of the public’s relationship to factual information. Overwhelmingly, when presented with [corrective] factual information … the average [person] accedes to the correction and distances himself from the inaccurate claim.”

A third paper, reporting on further empirical research, notes that prior studies “have reached conflicting conclusions about people’s willingness to update their factual beliefs in response to counter-attitudinal information” and that while some research supports the backfire effect in finding people “highly resistant to unwelcome information… other studies find that fact-checks or other forms of corrective information can partly overcome directionally motivated reasoning and reduce misperceptions”. For example, during the 2016 US presidential campaign, when misleading claims made by Donald Trump were corrected, this reduced belief in those claims even among Trump supporters. (Interestingly, attitudes toward Trump were not affected, suggesting that “corrective information can reduce misperceptions, but will often have minimal effects on candidate evaluations or vote choice”, and more generally, that “people may be willing to update their factual beliefs while interpreting the facts in a belief-consistent manner”.)

Postscript: In Nature (5 August 2021) Lee McIntyre has a short ‘World View’ article entitled Talking to science deniers and sceptics is not hopeless which argues that fears of backfire effects are overblown, and advice to listen and interact still stands. The author characterises the backfire effect as the finding that people sometimes embrace misconceptions more strongly when faced with corrective information, implying that pushing back against falsehoods is counterproductive. He goes on to pronounce this finding ‘irreproducible’, arguing “Even the researchers whose results were exaggerated to popularize this idea do not embrace it any more, and argue that the true challenge is learning how best to target corrective information” (citing Brendan Nyhan, Why the backfire effect does not explain the durability of political misperceptions). Rebuttals can be effective, says McIntyre:

Science deniers — whether on vaccines, evolution or climate — all draw on the same flawed reasoning techniques: cherry-picking evidence, relying on conspiracy theories and fake experts, engaging in illogical reasoning and insisting that science must be perfect. A landmark 2019 study (Schmid & Betsch, Effective strategies for rebutting science denialism in public discussions) showed that critiquing flawed techniques can mitigate disinformation… It is an axiom of science communication that you cannot convince a science denier with facts alone; most science deniers don’t have a deficit of information, but a deficit of trust. And trust has to be built, with patience, respect, empathy and interpersonal connections.

Postscript, 26/02/2021

Here’s an excerpt from Hugh Breakey’s paper ‘That’s unhelpful, harmful and offensive!’ Epistemic and ethical concerns with meta-argument allegations, a section entitled ‘Social Divisiveness’:

In an environment where levelling meta-argument allegations [allegations like ‘that’s unhelpful’, ‘that’s harmful’ and ‘that’s offensive’] is common, when people from different political persuasions argue there will be frequent violations of argumentational norms, regular conflict escalations and increasing opportunities for narrow-mindedness. Instead of people across political divides having the type of positive interactions that can draw them together (Chua 2018, 201), their attempts at dialogue lead to derailment and outrage. This context will then weaken the prospects for future constructive arguments, as the vulnerability, honesty and reciprocity required (Crawford 2009, 111–118) will be increasingly absent. As Keller and Brown (1968, 75) observe, “our communicative habits, as speakers, are moulded and shaped by the responses we get from listeners.”

In the longer term, differing views on offence and harm, and the eagerness to trade meta-argument allegations upon their basis, can create a disconnect or “empathy wall” (Hochschild 2016, 5) between two sides of politics, where they no longer talk—where they no longer know how to talk—to the other side. No side wants to engage with the other when this will just provide their opponents with an opportunity to say offensive and harmful things. Equally, no side wants to broach difficult topics when it is only a matter of time before the argument is utterly derailed, and the topic of conversation once again moves to heated allegations. Once this occurs, groups will tend to talk only to like-minded fellows, contributing to problems of political ‘bubbles’, groupthink and group polarisation, where contrary views are never aired and social dynamics lead to increasingly extreme positions (Peters 2019, 406).

I submit that most people who engage in political argument do not desire this outcome. They do not want a halt to genuine debate between arguers holding different opinions. What they want, I suggest, is for that debate to happen, but to take place on their terms, controlled by their views of what is offensive, and constrained by their views of what is harmful. But once we realize that the very differences that make us disagree on substantive policy will—except for the most obvious and immediate cases—also lead us to disagree on matters of offence and harm, we can appreciate that this wish is fanciful. If we value the capacity, as individuals and as a society, to get together and talk about our disagreements, then we must avoid using those very disagreements as a reason not to talk to each other. But meta-argument allegations do exactly this. They make agreement on fundamental areas of disagreement—on what is offensive, on what is unhelpful and harmful, and on where responsibility for either lies—a precondition for being able to talk about fundamental areas of disagreement.

Breakey concludes:

[There are] an array of epistemic and moral concerns that recommend “argumentational tolerance”—a principled wariness in employing meta-argument allegations. This virtue empowers us to respect important norms of ethical argument, value the goods that may be pursued through argument, avoid personal perils of bias and closemindedness, reduce conflict escalation, and contribute to a larger culture that is supportive of rational, inclusive debate. Argumentational tolerance thus warrants inclusion within existing lists of argumentational virtues and ethics (Thorson 2016, 363–365; Aberdein 2010, 171–175; Correia 2012, 231–237).

Postscript, 22/02/2024

In his paper What Does Public Philosophy Do? (Hint: It Does Not Make Better Citizens), Jack Russell Weinstein writes:

some in the argumentation theory community question whether we can ever persuade someone of anything; not to mention that discourse is always complicated by confirmation bias, belief persistence, and confabulation. When persuasion does happen, it is rarely the consequence of an editorial or a well-reasoned presentation…

I do not mean to claim that no one can be persuaded of anything ever, but rather that the stronger the conviction, the less argument-inspired any cognitive change will be. The possibility of persuasion diminishes radically in the political realm, or so a myriad of studies bear out.*

The mistake that Mill and others make is to assume that our political beliefs are predominantly rational, and that we must deemphasize the emotive components because they, somehow, have less moral worth. I think that attitude is built on an empirically false moral psychology and a problematic normative account of what matters intellectually. Furthermore, it seems to me that the more polarized a society is, the less rational persuasion can bridge difference…

[W]hile I must fight through those things that impair my attempts at objectivity—confirmation bias, conflicts-of-interest, brute selfishness, ignorance—I also have to trust myself enough to act on my political beliefs. Without such convictions, I am left with mere preference, inaction, or Stephen Colbert’s self-consciously absurd truthiness.

* For an overview of the research suggesting that rational argument reinforces political belief rather than changes people’s minds, see: Jarol Manheim, Strategy in Information and Influence Campaigns: How Policy Advocates, Social Movements, Insurgent Groups, Corporations, Governments, and Others Get What They Want (London & New York: Routledge, 2011), especially chapter three.

………………………………….

1 Refer to the Hi Phi Nation podcast episode Chamber of Facts, which inspired this blog post.

………………………………….

The Philosophy Club works with teachers and students to develop a culture of critical and creative thinking through collaborative enquiry and dialogue.

I think people want to end pseudo-debate or going through the motions of argument.

Thank you for your examination of the backlash effects based on some of the best philosophical evidence available to us.

Hi Adelaide, thanks for taking the time to read the postscript!